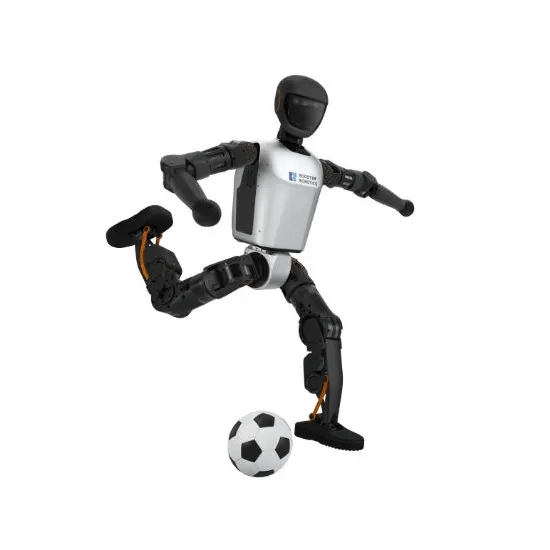

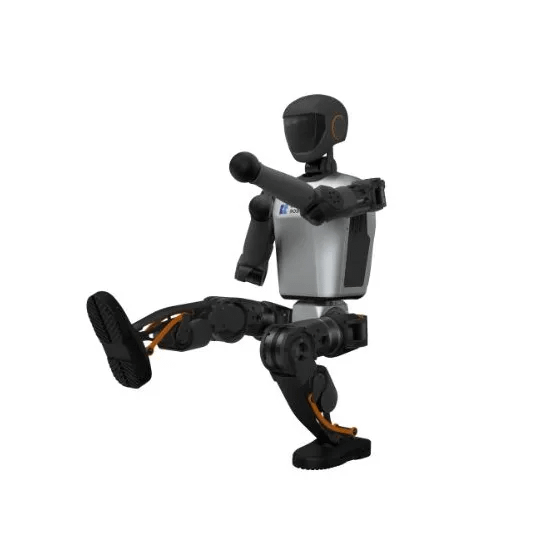

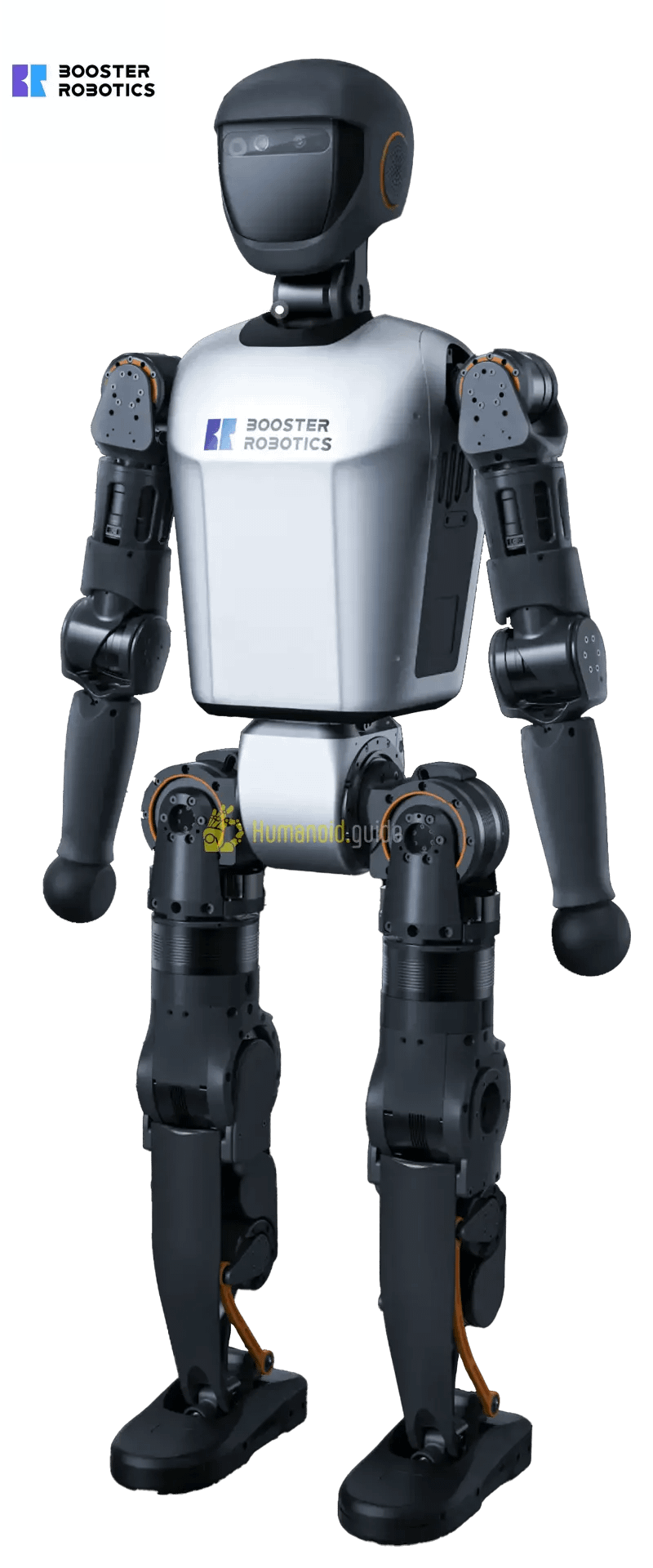

Booster T1 is an agile, open-source humanoid platform built for developers and researchers. Standing approximately 1.2 m tall with 23 joints, it offers omnidirectional walking, onboard AI compute on an NVIDIA Jetson AGX Orin, and full support for ROS2 and simulation tools, making it an adaptable, high-mobility robotics foundation.

Product Description

The Booster T1 is a developer-focused humanoid robot designed for research, education, and advanced robotics projects. It combines agile locomotion, powerful onboard AI processing, and an open architecture for rapid innovation.

Key Features:

- Lightweight, Durable Build – About 1.2 m tall, impact-resistant, and highly agile.
- Configurable Degrees of Freedom – 23 DoF standard, expandable to 31 or 41 for advanced manipulation.
- High-Performance AI – NVIDIA Jetson AGX Orin with up to 200 TOPS for real-time perception and control.
- Full Sensor Suite – RGB-D camera, 9-axis IMU, microphone array, and speaker for navigation and interaction.
- Omnidirectional Motion – Smooth walking in all directions with precise joint control.
- Wide Software Compatibility – ROS2 support and integration with leading simulation platforms.
- Mobile & Edge AI Capabilities – Bluetooth/app control, speech/audio functions, and object detection.
- Competition-Proven – Demonstrated excellence in RoboCup with wins in navigation, racing, and tasks.
- Reinforcement Learning Support – Booster Gym framework for sim-to-real transfer.
- Efficient Power – Up to 2 hours walking or 4 hours standing runtime.
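The two runtime figures above can be combined into a rough mixed-use estimate. The sketch below assumes, and this is not stated by the vendor, that the battery drains at a constant rate in each mode, so walking uses half of the charge per hour and standing a quarter. The function name `mixed_runtime_hours` and the linear model are illustrative only.

```python
WALK_HOURS = 2.0   # "up to 2 hours walking" from the feature list
STAND_HOURS = 4.0  # "up to 4 hours standing" from the feature list


def mixed_runtime_hours(walk_fraction: float) -> float:
    """Estimate runtime when `walk_fraction` of the time is spent walking.

    Assumption (not from the vendor): each mode drains the battery at a
    constant rate, so the hourly drain is a weighted average of the two.
    """
    if not 0.0 <= walk_fraction <= 1.0:
        raise ValueError("walk_fraction must be between 0 and 1")
    # Fraction of a full charge consumed per hour of mixed use.
    drain_per_hour = walk_fraction / WALK_HOURS + (1.0 - walk_fraction) / STAND_HOURS
    return 1.0 / drain_per_hour


if __name__ == "__main__":
    for fraction in (0.0, 0.5, 1.0):
        print(f"{fraction:.0%} walking: about {mixed_runtime_hours(fraction):.2f} h")
```

Under these assumptions, an even split between walking and standing gives roughly 2.7 hours. Actual runtime will depend on payload, gait, and compute load.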

Mobility & Structure

| Attribute | Value | Description | Why It Matters |
| --- | --- | --- | --- |
| Locomotion Type | | Bipedal, wheeled-hybrid, etc. | Defines interaction envelope |
| Degrees of Freedom (DOF) | | Number of controllable joints | Directly tied to dexterity & versatility |
| Max Walk Speed | | m/s | Static, dynamic balancing |

Upper Body & Manipulation

| Attribute | Value | Description | Why It Matters |
| --- | --- | --- | --- |
| Arm DOF | | Each arm's axis count | Higher = more natural movement |
| Hand Dexterity | | Pinch / 3-finger / 5-finger | Newtons |
| Grip Feedback | | Force, pressure, tactile sensors | Enables delicate handling and learning |

Perception

| Attribute | Value | Description | Why It Matters |
| --- | --- | --- | --- |
| Visual Sensors (Eyes) | | RGB / Depth / IR / LiDAR | Navigation, facial recognition, object tracking |
| Eye DOF / Movement | | Can they move? (Y/N, axis count) | Naturalistic interaction & gaze tracking |
| Audio Sensors (Ears) | | Number of mics + array type | Needed for voice commands, sound source localization |
| Face Display / Actuators | | LED screen / moving eyebrows / static | Social communication, emotional response |
| Face Expressiveness | | None / Basic / Realistic | Higher = better interaction in social spaces |

Interfaces

| Attribute | Value | Description | Why It Matters |
| --- | --- | --- | --- |
| Voice Recognition | | NLP model + languages supported | Enables natural user interaction |
| Speech Output | | TTS engine used + quality level | Critical for public/commercial use |
| Touch UI / Screen | | Present or not | Optional fallback interaction |
| Remote Control | N/A | Web / App / Joystick | Needed for manual override or remote training |
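The specification tables above share one schema (Attribute, Value, Description, Why It Matters), and most rows have no value filled in. As a minimal sketch, the schema can be kept as structured records so the unfilled attributes are easy to list. `SpecRow` and `missing_values` are hypothetical names, and the only values used are the 23 DoF from the Key Features list and the N/A shown for Remote Control.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SpecRow:
    section: str
    attribute: str
    description: str
    why_it_matters: str
    value: Optional[str] = None  # None means the page gives no value


def missing_values(rows: List[SpecRow]) -> List[str]:
    """Return the attributes that still have no value."""
    return [row.attribute for row in rows if row.value is None]


rows = [
    SpecRow("Mobility & Structure", "Degrees of Freedom (DOF)",
            "Number of controllable joints",
            "Directly tied to dexterity & versatility",
            value="23"),  # from the Key Features list
    SpecRow("Perception", "Eye DOF / Movement",
            "Can they move? (Y/N, axis count)",
            "Naturalistic interaction & gaze tracking"),  # no value on the page
    SpecRow("Interfaces", "Remote Control",
            "Web / App / Joystick",
            "Needed for manual override or remote training",
            value="N/A"),  # as listed in the Interfaces table
]

print(missing_values(rows))
```

Keeping `value` optional separates "not published" from an explicit entry such as N/A, which the page does use for one row.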

Similar products

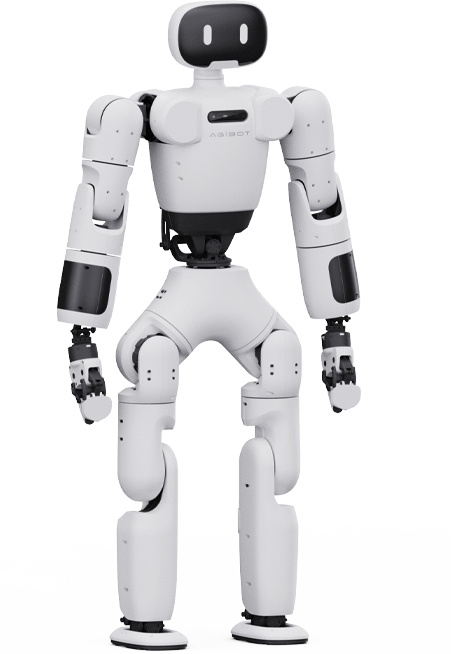

Zhongqing PM01 Humanoid Robot

The Zhongqing PM01 is a versatile humanoid robot...

Booster T1

The Booster T1 is an open-source humanoid robot...

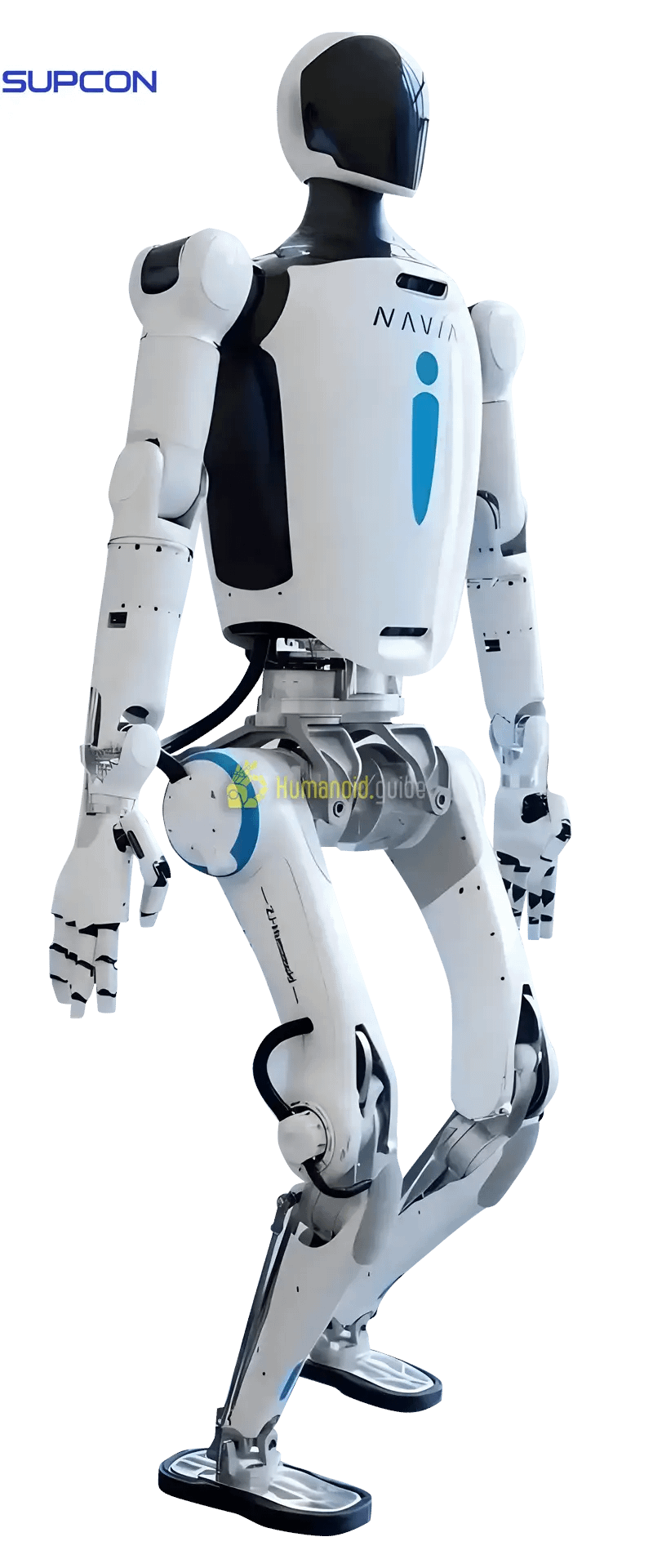

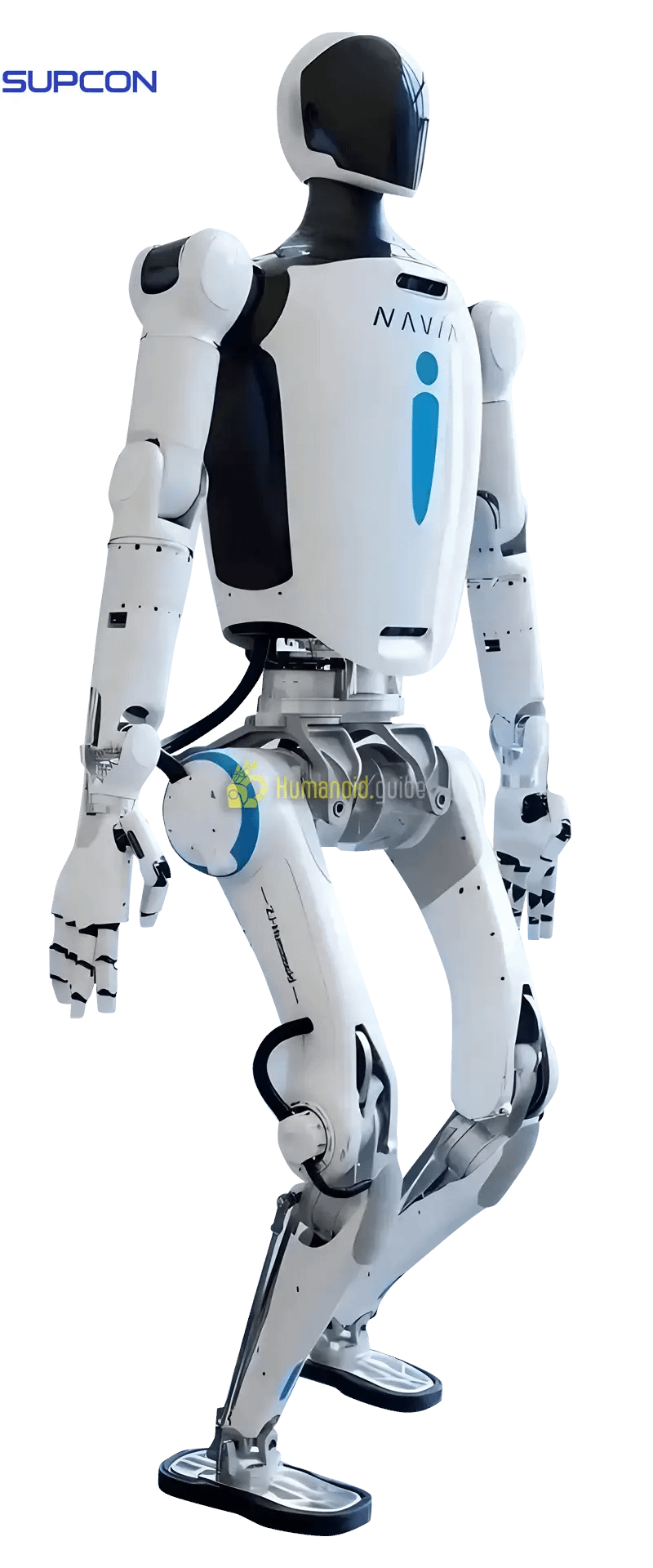

Navigator No. 2 NAVIAI

NAVIAI Navigator 2 is a humanoid robot with...

Lingxi X1

The Lingxi X1 is an open-source humanoid robot...

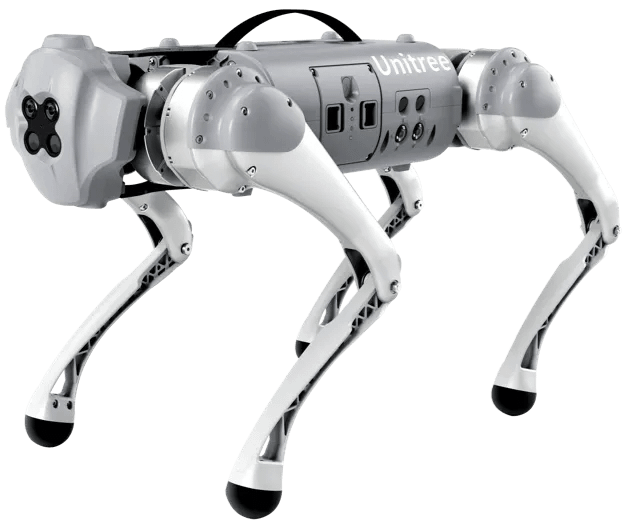

GO1 Quadruped Robot Head

The GO1 Quadruped Robot Head equips your quadruped...

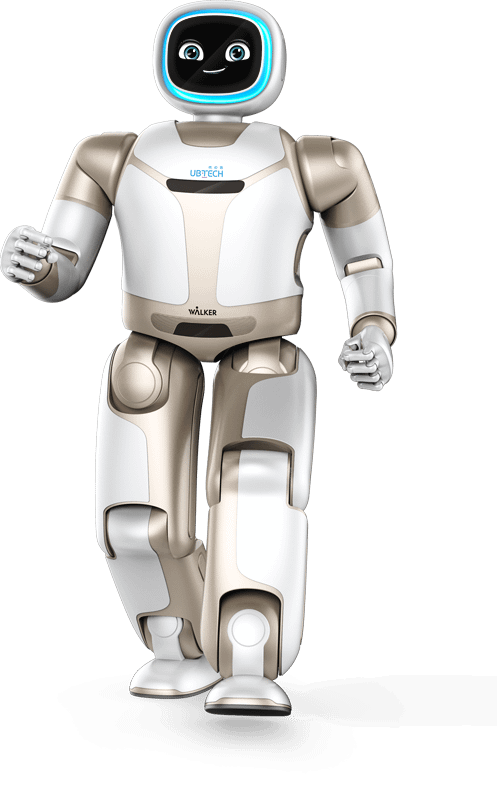

Walker

Agile bipedal humanoid robot with advanced mobility and...

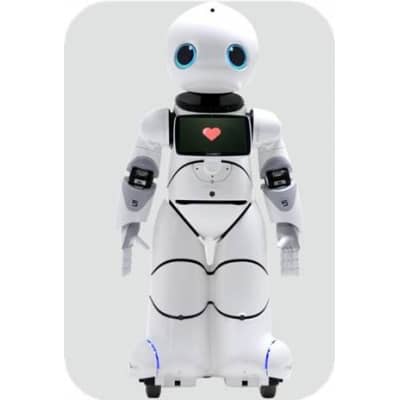

Youyou Robot

Advanced humanoid robot with multi-sensor perception and lifelike...

Astribot S1

The Astribot S1 is an intelligent autonomous robot...

Navigator II NAVIAI

Navigator II NAVIAI is a full-size legged humanoid...

AEON humanoid robot

AEON is an industrial-grade humanoid robot engineered by...
